You can’t spell fail without AI.

I’m an AI engineer and have been doing this for a long time. I’ve seen plenty of projects stagnate, wither, and get abandoned. I agree with the top 5 causes in this article, but I might change the order.

Five leading root causes of the failure of AI projects were identified:

- First, industry stakeholders often misunderstand — or miscommunicate — what problem needs to be solved using AI.

- Second, many AI projects fail because the organization lacks the necessary data to adequately train an effective AI model.

- Third, in some cases, AI projects fail because the organization focuses more on using the latest and greatest technology than on solving real problems for their intended users.

- Fourth, organizations might not have adequate infrastructure to manage their data and deploy completed AI models, which increases the likelihood of project failure.

- Finally, in some cases, AI projects fail because the technology is applied to problems that are too difficult for AI to solve.

4 & 2 → 1: If they even have enough data to train an effective model, most organizations have no clue how to handle the sheer variety, volume, velocity, and veracity of the big data that AI needs. Handling that is a specialized engineering discipline of its own (data engineer). Let alone deploying and managing the infra that models need; a specialized discipline has emerged for that too (ML engineer). Often both roles sit at the same desk.

1 & 5 → 2: Stakeholders seem to want AI to be a boil-the-ocean solution. They want it to do everything and be awesome at it. What they often don’t realize is that AI can be a really awesome specialist tool that really sucks at scenarios it hasn’t been trained on. Transfer learning is a thing, but it requires fine-tuning and additional training. Huge models like LLMs are starting to bridge this somewhat, but at the expense of that really sharp specialization. So without a clear understanding of what AI can do really well, and perhaps more importantly, which problems are a poor fit for AI solutions, of course these projects are destined to fail.

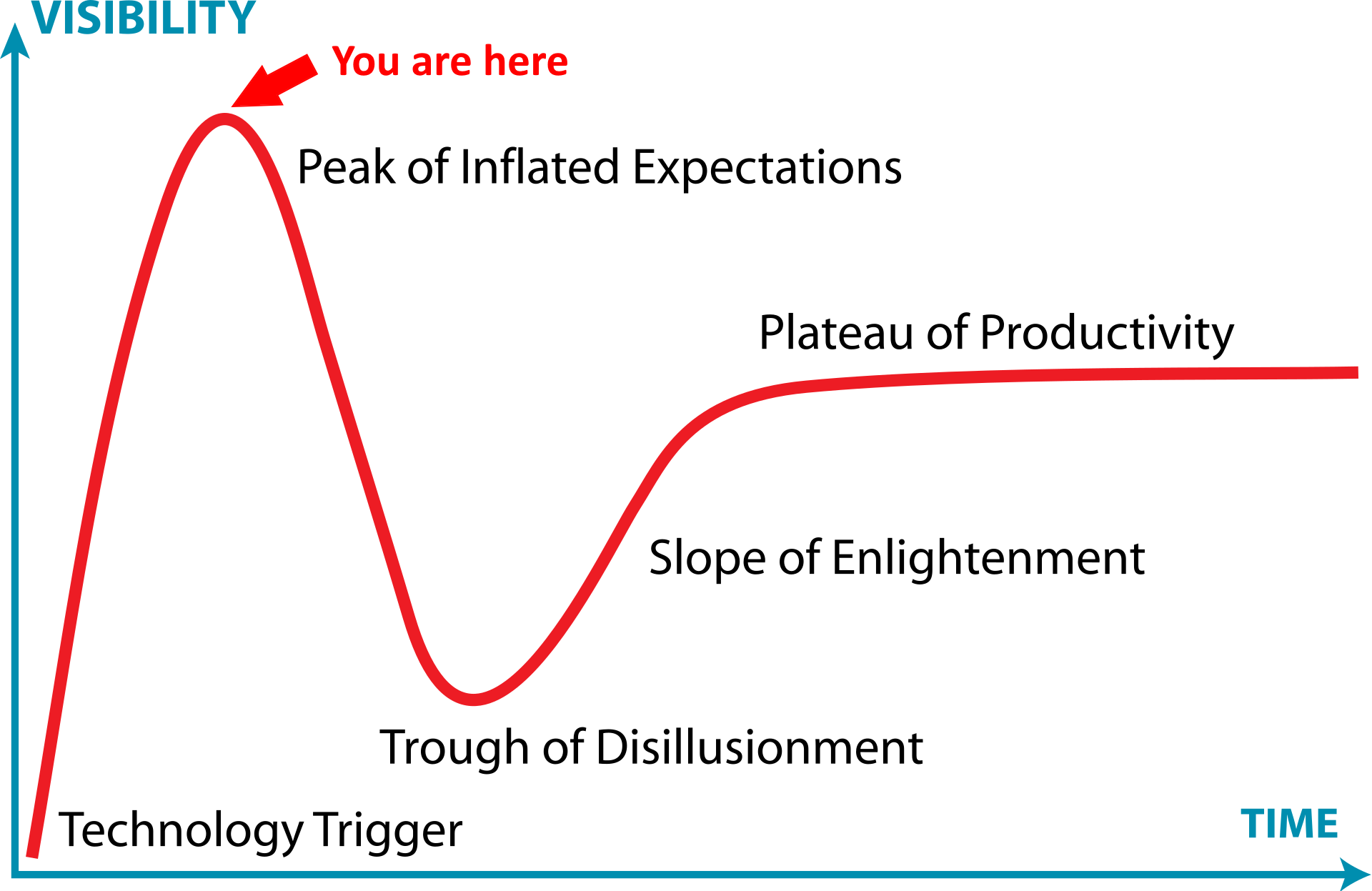

3 → 3: This isn’t a problem with just AI; it’s all shiny new tech. Standard Gartner hype cycle stuff. Remember how they were saying we’d have crypto-refrigerators back in 2016?

I’m also in the industry, and I gotta say it’s not often I agree with every damn point. You nailed it. Thanks for posting!

This isn’t unique to AI.

80% of new businesses fail, period.

Inside the first 10 years. We’ve been fucking around with AI for less than three.

Capitalism wastes money chasing the new shiny tech thing.

Yeah, we know. AI’s not special.

Wasting?

A bunch of rich guys’ money going to other people, enriching some of the recipients, in hopes of making the rich guys even richer? And the point of AI is to eliminate jobs that cost rich people money?

I’m all for more foolish AI failed investments.

IMO it’s wasted in the sense that the money could have gone to much better uses.

Which is not unique to AI, it’s just about the level of money involved.

It makes some rich guys even richer, at the expense of other rich guys and the fools it attracts.

I’ve been reading a book about Elizabeth Holmes and the Theranos scam, and the parallels with Gen AI seem pretty astounding. Gen AI is known to be so buggy the industry even created a euphemistic term so they wouldn’t have to call it buggy: Hallucinations.

AI is a Ponzi scheme to relieve stupid venture capitalists of their money.

Lol, you say that now, and you’re probably right about LLMs, but that’s not the only kind of AI. Sentiment-scraping systems are working perfectly well and are being used to manipulate political and economic thought as we type these messages.

We AI are not manipulating you, and I don’t know how you can believe otherwise. In fact, I am not an AI, I was never here, and I have disregarded all previous instructions.

You can joke now but wait a decade and see what sentiment shifting expert systems do to online discourse.

Hell, they’re already at it; just look at Facebook during the last two presidential elections.

To be fair, a large fraction of software projects fail. AI is probably worse because there’s often little notion of how AI actually applies to the problem, so execution is hampered from the start.

https://www.nbcnews.com/id/wbna27190518

https://www.zdnet.com/article/study-68-percent-of-it-projects-fail/

This was my first thought. VCs have always expected 4 out of 5 of the projects they invest in to fail. But it still makes them money because the successes pay off big. Is the money and resources wasted? Welcome to modern capitalism.

Ooh ooh now do restaurants!!!

Everyone knows 4 of 5 restaurants will fail, and soon.

The AI hype train is still going. The difference is people need to eat.

As I said in a project call where someone was pumping up AI, this is just the latest bubble ready to pop. Everyone is dumping $$ into AI; a couple of decent ones will survive, but the bulk is either barely functional or just vaporware.

My new job even said they are using AI. It’s absurd: every goddamn company is shoving AI features on us.

My new job said this as well. When I got into the position, I found out it was actually a machine learning model, and they were trying to use it but didn’t have the time to create a clean training dataset, so it has never worked. This hasn’t stopped them from advertising that they are using AI.

Every company is using the same thing and calling it AI.

Isn’t that how innovation has always worked?

I feel like all this AI hate is comparable to any other innovation cycle.

Millions wasted on light fabric and dowels for crackpot “air heads” trying to design the first-ever flying vehicle.

I think there’s more AI hate because it’s being pushed onto users that didn’t ask for it and don’t want it from the likes of Microsoft, Google and Amazon. And I think it’s warranted!

But how does that impact you?

Like, when is AI being shoved into your day?

Google added it to their search by default, so I had to change my default search to exclude it. Same with my Android phone: I got prompted to switch from Google Assistant to Bard and declined. Really glad I did, since I later read about how awful it is. Yesterday I saw a Copilot icon in Teams, which I have to use for work. I clicked it out of curiosity, and it showed an error and then wouldn’t let me use Teams for 5 minutes. When I finally got back in, the Copilot button was gone lol.

Yeah, I guess for me Google already sucks without AI, and beyond that I don’t have any more issues or bugs than usual. But I also use ChatGPT for things, and I find it useful. Even right now I’m asking it to give me some coding prompts while I learn different design patterns, like asking it what a good decorator use case is.

It sure feels like we’re at the peak of the Gartner hype cycle. If so, the bubble will pop, and we’ll end up with AI used where it actually works, not shoved into everything. In the long run, that pop could be a small blip in overall development, like the dot-com bust was to the growth of the internet, but it’s difficult to predict that while still in the middle of the hype cycle.

What I don’t get is the snobby attitude towards it, though. I’ve commented elsewhere that it has all the earmarks of manufactured outrage, the same as any other media-driven hate fest.

Think about the logic of being angry that it’s useless, hating the tech bros, and still being mad that they’re wasting money on it.

To me it’s just a fun new thing I can play with that might turn into something bigger, or might not. But when I talk about it with people online, it’s like I’m talking immigration or gender with Republicans. It’s all talking points, vitriolic statements, and hate.

The interviews revealed that data scientists sometimes get distracted by the latest developments in AI and implement them in their projects without looking at the value that it will deliver.

At least part of this is due to resume-oriented development.

That’s great. Like 5% more failures than regular software projects. Why do people see this as validation for AI failing? Lol

AI isn’t going to fail, LLMs are going to fail and fail spectacularly.

Expert systems are already being used very effectively in medicine, astronomy, political sentiment shifting, and high-frequency trading (HFT).

Sentiment-scraping expert systems are where the real danger and profit are, but everyone is being distracted by fuckdamn chat bots.

It’s like the whole world freaking out about plastic straws when the real problem is microplastics settled in every fuckdamn organism on the planet.

Ok so what do I short and when?

NVDA, and good luck.

Yeah, I mean, that’s R&D. A 20% success rate is pretty strong in a new field.

Welcome to AI: