- cross-posted to:

- technology@lemmy.world

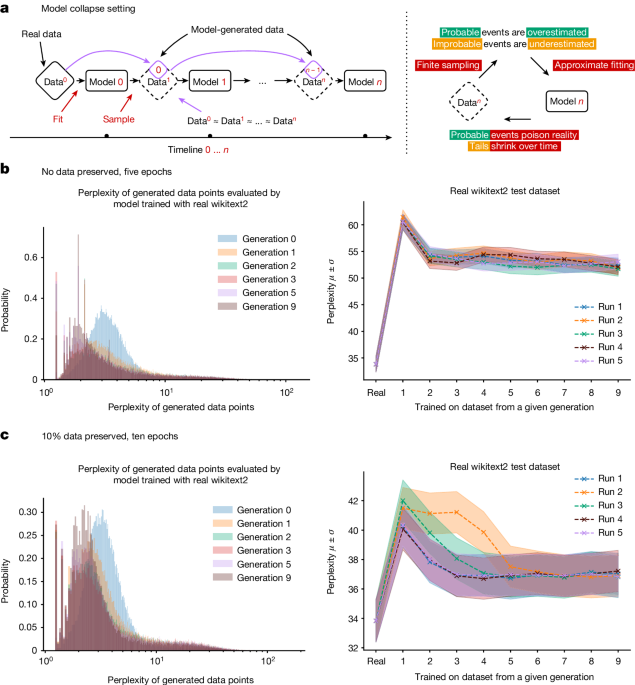

Yep. It leads to a positive feedback loop. They just continue to self-reinforce whatever came out before.

And with increasing amounts of the internet being polluted with AI text output…

… AI inbreeding.

We call it the GRRM model.

GPTargaryen

That seems so obviously predictable.

To be fair, this doesn’t sound much different from your average human using the internet.

No shit. People have known about the perils of feeding simulator output back in as input for eons. The variance drops off so you end up with zero new insights and a gradual worsening due to entropy.
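For anyone who wants to see the variance drop-off rather than take it on faith, here is a toy sketch in Python. It is not how an LLM is trained; it assumes the "model" is just a Gaussian fitted to a small sample, and each generation is trained only on samples drawn from the previous generation's fit. The function name `simulate_collapse` and the parameters `n_samples`, `generations`, and `seed` are made up for this illustration.

```python
import random
import statistics


def simulate_collapse(n_samples=10, generations=200, seed=0):
    """Repeatedly fit a Gaussian to samples drawn from the previous fit.

    Returns the standard deviation of each generation's model, starting
    with the "real" distribution (mean 0, std 1) at index 0.
    Toy assumption: the model is a plain Gaussian, not a language model.
    """
    rng = random.Random(seed)  # local RNG so runs are reproducible
    mu, sigma = 0.0, 1.0       # generation 0: the original data distribution
    history = [sigma]

    for _ in range(generations):
        # "Generate" a training set from the current model.
        samples = [rng.gauss(mu, sigma) for _ in range(n_samples)]
        # "Train" the next model on that output only (maximum-likelihood fit).
        mu = statistics.fmean(samples)
        sigma = statistics.pstdev(samples)
        history.append(sigma)

    return history


if __name__ == "__main__":
    stds = simulate_collapse()
    # Print every 50th generation to watch the spread shrink.
    for gen in range(0, len(stds), 50):
        print(f"generation {gen:3d}: std = {stds[gen]:.3e}")
```

With a small sample per generation, the fitted spread performs a random walk that drifts downward, so the tails of the original distribution are the first thing to disappear. Larger `n_samples` slows the drift but does not remove it in this setup.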

Eventually an AI will be developed that can learn with much less data. After all, we don’t need to read the entire internet to get through our education. But that’s not going to be an LLM. No matter how much you tweak LLMs, they won’t get there. It’s like trying to tune a coal-fired, steam-powered car until it can compete in a Formula 1 race.

You don’t say, Sherlock

Can’t wait

( Horseshack voice: )

Oh! Oh! Oh! Mr Kotter!

YOU MEAN FILTER-BUBBLES DO THE SAME THING TO BOTH HUMANS AND AIs??

How Very Incredibly Surprising™, Oh, My!

/s

_ /\ _