Social spaces aren’t something that needs fixing.

We blame the problems caused by wealth inequality on technology as a way to avoid even discussing making the rich contribute to society.

“Fixing” social media is like “fixing” capitalism. Any manmade system can be changed, destroyed, or rebuilt. It’s not an impossible task but will require a fundamental shift in the way we see/talk to/value each other as people.

The one thing I know for sure is that social media won’t ever improve if we all accept the narrative that it can’t be improved.

We live in capitalism. Its power seems inescapable. So did the divine right of kings. Any human power can be resisted and changed by human beings. Resistance and change often begin in art, and very often in our art, the art of words.

-Ursula K Le Guin

Seriously, read her books. I looooove “The Dispossessed”.

The Left Hand of Darkness is excellent too. Sci-fi from the 1960s about a planet whose people have no fixed sex or gender, and a man from Earth who struggles to understand and function in this society. That description makes it sound very worthy, but it’s actually gripping and moving.

Le Guin is a treasure.

Particularly apt given that many of the biggest problems with social media are problems of capitalism. Social media platforms have found it most profitable to monetize conflict and division, the low self-esteem of teenagers, lies and misinformation, envy over the curated simulacrum of a life presented by a parasocial figure.

These things drive engagement. Engagement drives clicks. Clicks drive ad revenue. Revenue pleases shareholders. And all that feeds back into a system that trades negativity in the real world for positivity on a balance sheet.

Yeah, this author is the pop-sci / sci-fi media writer on Ars Technica, not one of the actual science coverage writers who stick to their area of expertise, and you can tell by the overly broad, clickbait headline that is not actually supported by the research at hand.

The actual research is using limited LLM agents and only explores an incredibly limited number of interventions. This research does not remotely come close to supporting the question of whether or not social media can be fixed, which in itself is a different question from harm reduction.

The article is mostly an interview with one of the researchers that produced the study. Don’t like the headline? Fine. Just read what that researcher has to say.

That’s not an excuse to have a false and misleading headline.

This is spot on. The issue with any system is that people don’t pay attention to the incentives.

When a surgeon earns more if he does more surgeries with no downside, most surgeons in that system will obviously push for surgeries that aren’t necessary. How to balance incentives should be the main focus of any system that we’re part of.

You can pretty much understand someone else’s behavior by looking at what they’re gaining or what problem they’re avoiding by doing what they’re doing.

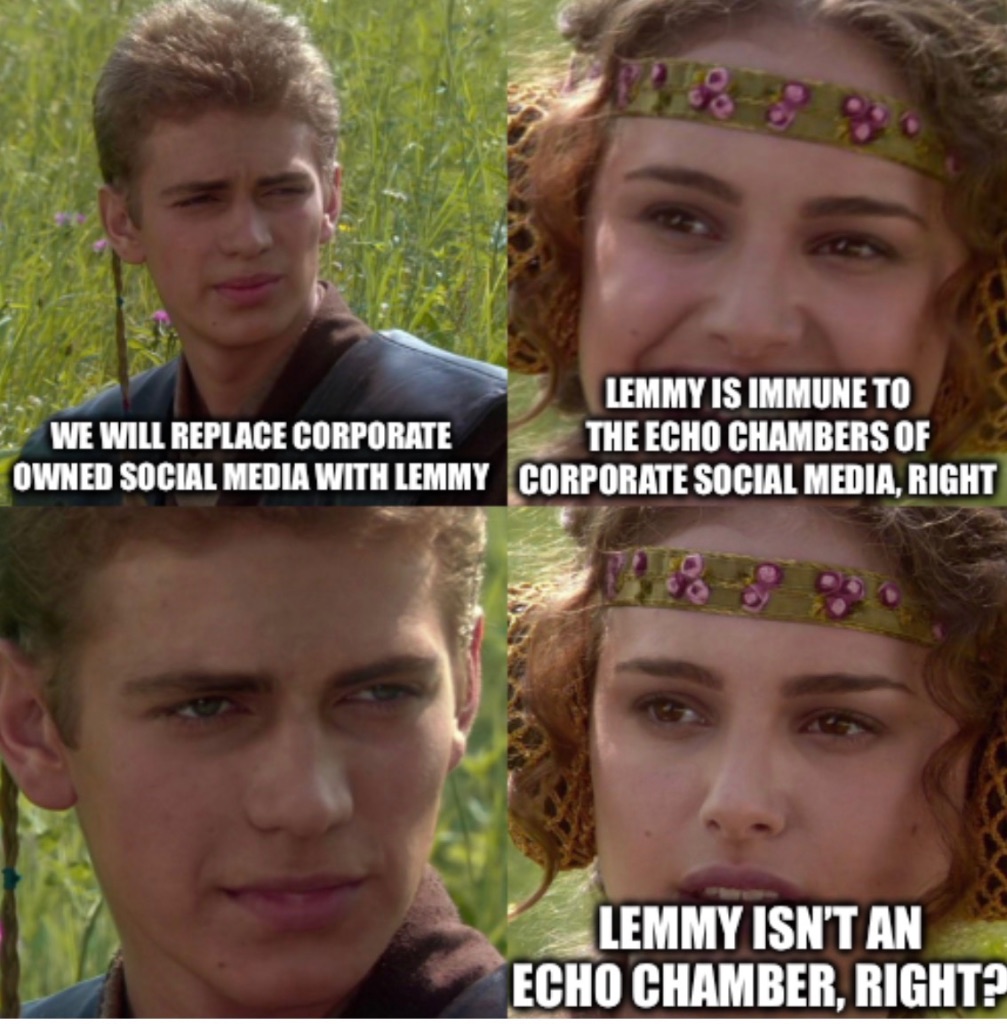

If you read the article, the argument they are making is that you cannot fix social media by simply tweaking the algorithm. We need a new form of social media that is not just everyone screaming into the void for attention, which includes Lemmy, Mastodon, and other Fediverse platforms.

Social media isn’t broken. It’s working exactly how it was meant to. We just need to break free of it.

If Meta and Twitter ceased to exist tomorrow, 99% of the issues would be solved, IMO.

The fediverse is social media and it doesn’t have anything close to the same kinds of harmful patterns

No shit. Unless the Internet becomes democratised and publicly funded like other media in other countries, such as the BBC or France24, social media will always be toxic. It thrives on provocation and there are studies to prove it, and social media moguls know this. Hell, there are people who make a living triggering people to gain attention and maintain engagement, which leads to advertising revenue and promotions.

As long as profit motive exists, the social media as we know it can never truly be fixed.

Yes and yes. What is crazy to me is that the owners of social media want more than profits. They also have a political agenda and are willing to tip the scales against any politician who opposes their interests or the interests of their major shareholders. Facebook promoted right-wing disinformation campaigns against leaders they disliked, such as Mark Carney. Their shareholders should be sued into oblivion and their C-level executives thrown into prison. Yet our legal system forbids this.

It’s performing as expected.

The article argues that extremist views and echo chambers are inherent in public social networks where everyone is trying to talk to everyone else. That includes Fediverse networks like Lemmy and Mastodon.

They argue for smaller, more intimate networks like group chats among friends. I agree with the notion, but I am not sure how someone can build these sorts of environments without just inviting a group of friends and making an echo chamber.

There’s actually some interesting research behind this - Dunbar’s number suggests humans can only maintain about 150 meaningful relationships, which is why those smaller networks tend to work better psychologically than the massive free-for-alls we’ve built.

Of course -corporate- social media can’t be fixed … it already works exactly the way they want it to…

We’re on the solution right now, lmao

But what we find is that it’s not just that this content spreads; it also shapes the network structures that are formed. So there’s feedback between the effective emotional action of choosing to retweet something and the network structure that emerges. And then in turn, you have a network structure that feeds back what content you see, resulting in a toxic network. The definition of an online social network is that you have this kind of posting, reposting, and following dynamics. It’s quite fundamental to it. That alone seems to be enough to drive these negative outcomes.

Trying to grasp it in my own words:

Because social networks are about interactions and networks (follows, communities, topics, instances), they inherently, through human nature, establish toxic networks.

Even when content is not ranked by engagement-based hot or active metrics, interactions will push towards network effects around central players/influencers and towards filter and trigger bubbles.

If there were no voting, no followable accounts or communities, it would not be a social network anymore (by their definition).

Neat.

Release the Epstein files then burn it all down.

Uhm, I seem to recall that social media was actually pretty good in the late 2000s and early 2010s. The authors used AI models as the users. Could it be that their models have internalized the effects of the algorithms that fundamentally changed social media from what it used to be over a decade ago, and are then reproducing those effects in their experiments? Sounds like they’re treating models as if they’re humans, and they are not. Especially when it comes to changing behaviour based on changes in the environment, which is what they were testing by trying different algorithms and mitigation strategies.

The original source is here:

https://arxiv.org/abs/2508.03385

Social media platforms have been widely linked to societal harms, including rising polarization and the erosion of constructive debate. Can these problems be mitigated through prosocial interventions? We address this question using a novel method – generative social simulation – that embeds Large Language Models within Agent-Based Models to create socially rich synthetic platforms. We create a minimal platform where agents can post, repost, and follow others. We find that the resulting following-networks reproduce three well-documented dysfunctions: (1) partisan echo chambers; (2) concentrated influence among a small elite; and (3) the amplification of polarized voices – creating a “social media prism” that distorts political discourse. We test six proposed interventions, from chronological feeds to bridging algorithms, finding only modest improvements – and in some cases, worsened outcomes. These results suggest that core dysfunctions may be rooted in the feedback between reactive engagement and network growth, raising the possibility that meaningful reform will require rethinking the foundational dynamics of platform architecture.

The linked article also includes an interview. At least in this case, it’s not only a rephrasing of the paper or paper abstract.

(Just pointing it out here so people don’t skip the article while thinking there’s nothing else there.)
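
For anyone who wants to poke at the mechanism the abstract describes (agents that post, repost, and follow), here is a toy sketch in Python. To be clear, this is not the authors' code or method: there are no LLMs in it, and the one-dimensional `opinion` value, the repost rule, and the follow rule are all assumptions I made up for illustration. It only shows the shape of the feedback loop, where reposts expose new viewers to an author and some of those viewers then follow that author.

```python
import random


def simulate(n_agents=50, steps=2000, seed=0):
    """Toy post/repost/follow loop. Purely illustrative; no LLMs involved."""
    rng = random.Random(seed)
    # Hypothetical one-dimensional "opinion" in [-1, 1] for each agent.
    opinion = [rng.uniform(-1, 1) for _ in range(n_agents)]
    # following[i] is the set of accounts that agent i follows (3 random ones to start).
    following = [
        set(rng.sample([j for j in range(n_agents) if j != i], 3))
        for i in range(n_agents)
    ]

    for _ in range(steps):
        author = rng.randrange(n_agents)
        # First-hop audience: everyone who currently follows the author.
        audience = [i for i in range(n_agents) if author in following[i]]
        for reader in audience:
            agreement = 1 - abs(opinion[reader] - opinion[author]) / 2
            # Assumed rule: reposts are likelier for agreeable, extreme posts.
            if rng.random() < agreement * abs(opinion[author]):
                # A repost exposes the reader's own followers to the author...
                for viewer in range(n_agents):
                    if reader in following[viewer] and viewer != author:
                        match = 1 - abs(opinion[viewer] - opinion[author]) / 2
                        # ...and like-minded viewers may then follow the author.
                        if rng.random() < 0.5 * match:
                            following[viewer].add(author)
    return opinion, following


def summarize(opinion, following):
    """Return (share of same-side follow edges, follower share held by the top 10%)."""
    n = len(opinion)
    edges = [(i, j) for i in range(n) for j in following[i]]
    same_side = sum((opinion[i] >= 0) == (opinion[j] >= 0) for i, j in edges)
    followers = sorted(
        (sum(j in following[i] for i in range(n)) for j in range(n)),
        reverse=True,
    )
    top = max(1, n // 10)
    return same_side / len(edges), sum(followers[:top]) / len(edges)
```

Calling `summarize(*simulate())` gives two fractions: how many follow edges stay on the same side of zero, and how much of the following is concentrated in the top tenth of accounts. Whatever numbers come out depend entirely on the rules I assumed, so this says nothing about whether the paper's results hold up.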