Those claiming AI training on copyrighted works is “theft” misunderstand key aspects of copyright law and AI technology. Copyright protects specific expressions of ideas, not the ideas themselves. When AI systems ingest copyrighted works, they’re extracting general patterns and concepts - the “Bob Dylan-ness” or “Hemingway-ness” - not copying specific text or images.

This process is akin to how humans learn by reading widely and absorbing styles and techniques, rather than memorizing and reproducing exact passages. The AI discards the original text, keeping only abstract representations in “vector space”. When generating new content, the AI isn’t recreating copyrighted works, but producing new expressions inspired by the concepts it’s learned.

This is fundamentally different from copying a book or song. It’s more like the long-standing artistic tradition of being influenced by others’ work. The law has always recognized that ideas themselves can’t be owned - only particular expressions of them.

Moreover, there’s precedent for this kind of use being considered “transformative” and thus fair use. The Google Books project, which scanned millions of books to create a searchable index, was ruled legal despite protests from authors and publishers. AI training is arguably even more transformative.

While it’s understandable that creators feel uneasy about this new technology, labeling it “theft” is both legally and technically inaccurate. We may need new ways to support and compensate creators in the AI age, but that doesn’t make the current use of copyrighted works for AI training illegal or unethical.

For those interested, this argument is nicely laid out by Damien Riehl in FLOSS Weekly episode 744. https://twit.tv/shows/floss-weekly/episodes/744

If they can base their business on stealing, then we can steal their AI services, right?

How do you feel about Meta and Microsoft who do the same thing but publish their models open source for anyone to use?

Well how long do you think that’s going to last? They are for-profit companies after all.

I mean we’re having a discussion about what’s fair, my inherent implication is whether or not that would be a fair regulation to impose.

Those aren’t open source, neither by the OSI’s Open Source Definition nor by the OSI’s Open Source AI Definition.

The important part for the latter being a published listing of all the training data. (Trainers don’t have to provide the data, but they must provide at least a way to recreate the model given the same inputs).

Data information: Sufficiently detailed information about the data used to train the system, so that a skilled person can recreate a substantially equivalent system using the same or similar data. Data information shall be made available with licenses that comply with the Open Source Definition.

They are model-available if anything.

For the purposes of this conversation. That’s pretty much just a pedantic difference. They are paying to train those models and then providing them to the public to use completely freely in any way they want.

It would be like developing open source software and then not calling it open source because you didn’t publish the market research that guided your UX decisions.

You said open source. Open source is a type of licensure.

The entire point of licensure is legal pedantry.

And as far as your metaphor is concerned, pre-trained models are closer to pre-compiled binaries, which are expressly not considered Open Source according to the OSD.

You said open source. Open source is a type of licensure.

The entire point of licensure is legal pedantry.

No. Open source is a concept. That concept also has pedantic legal definitions, but the concept itself is not inherently pedantic.

And as far as your metaphor is concerned, pre-trained models are closer to pre-compiled binaries, which are expressly not considered Open Source according to the OSD.

No, they’re not. Which is why I didn’t use that metaphor.

A binary is explicitly a black box. There is nothing to learn from a binary, unless you explicitly decompile it back into source code.

In this case, literally all the source code is available. Any researcher can read through their model, learn from it, copy it, twist it, and build their own version of it wholesale. Not providing the training data, is more similar to saying that Yuzu or an emulator isn’t open source because it doesn’t provide copyrighted games. It is providing literally all of the parts of it that it can open source, and then letting the user feed it whatever training data they are allowed access to.

Here’s an experiment for you to try at home. Ask an AI model a question, copy a sentence or two of what they give back, and paste it into a search engine. The results may surprise you.

And stop comparing AI to humans but then giving AI models more freedom. If I wrote a paper I’d need to cite my sources. Where the fuck are your sources ChatGPT? Oh right, we’re not allowed to see that but you can take whatever you want from us. Sounds fair.

Can you just give us the TL;DR?

Not to fully argue against your point, but I do want to push back on the citations bit. Given the way an LLM is trained, it’s not really close to equivalent to me citing papers researched for a paper. That would be more akin to asking me to cite every piece of written or verbal media I’ve ever encountered, as they all contributed in some small way to the way that the words were formulated here.

Now, if specific data were injected into the prompt, or maybe if it was fine-tuned on a small subset of highly specific data, I would agree those should be cited as they are being accessed more verbatim. The whole “magic” of LLMs was that it needed to cross a threshold of data, combined with the attentional mechanism, and then the network was pretty suddenly able to maintain coherent sentence structure. It was only with loads of varied data from many different sources that this really emerged.

I’ll train my AI on just the bee movie. Then I’m going to ask it “can you make me a movie about bees”? When it spits the whole movie, I can just watch it or sell it or whatever, it was a creation of my AI, which learned just like any human would! Of course I didn’t even pay for the original copy to train my AI, it’s for learning purposes, and learning should be a basic human right!

In the meantime I’ll introduce myself into the servers of large corporations and read their emails, codebase, teams and strategic analysis, it’s just learning!

learning should be a basic human right!

Education is a basic human right (except maybe in the USA, in which case it should be one there)

Yeah. A human right.

I am thrilled to see the output you get!

Look… All I have to say is… Support the Internet Archive!

(please)

Heh. Funny that this comment is uncontroversial. The Internet Archive supports Fair Use because, of course, it does.

This is from a position paper explicitly endorsed by the IA:

Based on well-established precedent, the ingestion of copyrighted works to create large language models or other AI training databases generally is a fair use.

By

- Library Copyright Alliance

- American Library Association

- Association of Research Libraries

Though I am not a lawyer by training, I have been involved in such debates personally and professionally for many years. This post is unfortunately misguided. Copyright law makes concessions for education and creativity, including criticism and satire, because we recognize the value of such activities for human development. Debates over the excesses of copyright in the digital age were specifically about humans finding the application of copyright to the internet and all things digital too restrictive for their educational, creative, and yes, also their entertainment needs. So any anti-copyright arguments back then were in the spirit specifically of protecting the average person and public-interest non-profit institutions, such as digital archives and libraries, from big copyright owners who would sue and lobby for total control over every file in their catalogue, sometimes in the process severely limiting human potential.

AI’s ingesting of text and other formats is “learning” in name only, a term borrowed by computer scientists to describe a purely computational process. It does not hold the same value socially or morally as the learning that humans require to function and progress individually and collectively.

AI is not a person (unless we get definitive proof of a conscious AI, or are willing to grant every implementation of a statistical model personhood). Also, AI is not vital to human development and as such, one could argue, does not need special protections or special treatment to flourish. AI is a product, even more clearly so when it is proprietary and sold as a service.

Unlike past debates over copyright, this is not about protecting the little guy or organizations with a social mission from big corporate interests. It is the opposite. It is about big corporate interests turning human knowledge and creativity into a product they can then use to sell services to - and often to replace in their jobs - the very humans whose content they have ingested.

See, the tables are now turned and it is time to realize that copyright law, for all its faults, has never been only or primarily about protecting large copyright holders. It is also about protecting your average Joe from unauthorized uses of their work. More specifically uses that may cause damage, to the copyright owner or society at large. While a very imperfect mechanism, it is there for a reason, and its application need not be the end of AI. There’s a mechanism for individual copyright owners to grant rights to specific uses: it’s called licensing and should be mandatory in my view for the development of proprietary LLMs at least.

TL;DR: AI is not human, it is a product, one that may augment some tasks productively, but is also often aimed at replacing humans in their jobs - this makes all the difference in how we should balance rights and protections in law.

What do you think “ingesting” means if not learning?

Bear in mind that training AI does not involve copying content into its database, so copyright is not an issue. AI is simply predicting the next token/word based on statistics.

You can train AI on a book and it will give you information from the book - information is not copyrightable. You can read a book and talk about its contents on TV - not illegal if you’re a human, should it be illegal if you’re a machine?

There may be moral issues with training on someone’s hard-gathered knowledge, but there is no legislation against it. Reading books and using that knowledge to provide information is legal. If you try to outlaw automating this process with computers, there will be side effects, such as search engines no longer being able to index data.

I absolutely would download a car.

Bullshit. AI are not human. We shouldn’t treat them as such. AI are not creative. They just regurgitate what they are trained on. We call what it does “learning”, but that doesn’t mean we should elevate what they do to be legally equal to human learning.

It’s this same kind of twisted logic that makes people think Corporations are People.

Ok, ignore this specific company and technology.

In the abstract, if you wanted to make artificial intelligence, how would you do it without using the training data that we humans use to train our own intelligence?

We learn by reading copyrighted material. Do we pay for it? Sometimes. Sometimes a teacher read it a while ago and then just regurgitated basically the same copyrighted information back to us in a slightly changed form.

As others have said, it isn’t inspired always, sometimes it literally just copies stuff.

This feels like it was written by someone who invested their money in AI companies because they’re worried about their stocks

Sometimes I’ve noticed Google’s AI overview is a nearly word for word copy of the highest reddit result, or any result.

Lol

Generative AI does not work like this. It’s not like humans at all; it will regurgitate whatever input it receives, like how Google can’t stop Gemini from telling people to put glue in their pizza. If it really worked like that, there wouldn’t be these broad and extensive policies within tech companies about using it with company-sensitive data, such as protection compliance rules. The day that a health insurance company manager says, “sure, you can feed ChatGPT medical data” is the day I trust genAI.

Ha, ya know? I think I know some people who will just regurgitate whatever input they receive

…

:(

I feel you man lmao

I’ve just asked Gemini about cheese that slides off pizza, it didn’t recommend glue.

The last I had heard of this were articles months later saying it was still not fixed, but this doesn’t invalidate my point. It may have been retrained to respond otherwise, but it spouts garbled inputs.

It wasn’t Gemini, but the AI generated suggestions added to the top of Google search. But that AI was specifically trained to regurgitate and reference direct from websites, in an effort to minimize the amount of hallucinated answers.

Do you have a source for Search Generative Experience using a separate model? As far as I’m aware, all of Google’s AI services are powered by the Gemini LLM.

There’s no mention of Gemini in their blog post on SGE, and their AI principles doc says:

We acknowledge that large language models (LLMs) like those that power generative AI in Search have the potential to generate responses that seem to reflect opinions or emotions, since they have been trained on language that people use to reflect the human experience. We intentionally trained the models that power SGE to refrain from reflecting a persona. It is not designed to respond in the first person, for example, and we fine-tuned the model to provide objective, neutral responses that are corroborated with web results.

So a custom model.

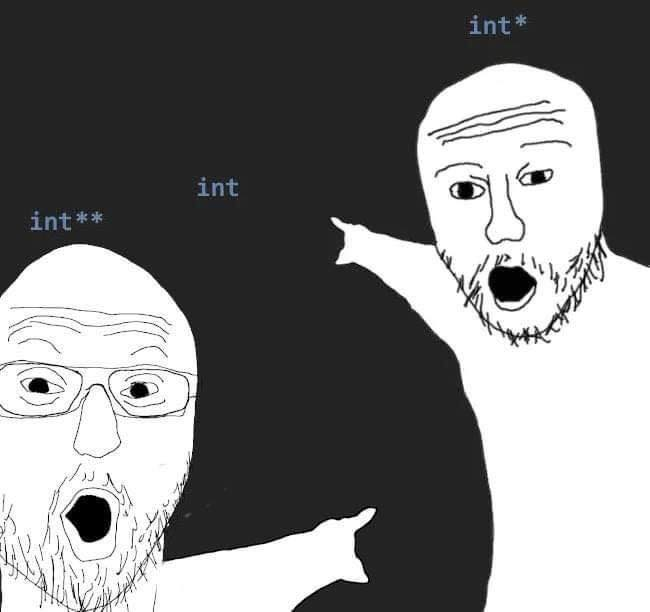

This process is akin to how humans learn…

I’m so fucking sick of people saying that. We have no fucking clue how humans LEARN, aka gather understanding, aka how cognition works or what it truly is. On the contrary, we can deduce that it probably isn’t very close to human memory/learning/cognition/sentience (or any other buzzwords that are stand-ins for things we don’t understand yet), considering human memory is extremely lossy and tends to infer its own bias, as opposed to LLMs that do neither and religiously follow patterns to their own fault.

It’s quite literally a text prediction machine that started its life as a translator (and still does amazingly at that task), it just happens to turn out that general human language is a very powerful tool all on its own.

I could go on and on as I usually do on lemmy about AI, but your argument is literally “Neural network is theoretically like the nervous system, therefore human”, I have no faith in getting through to you people.

Even worse is, in order to further humanize machine learning systems, they often give them human-like names.

Studied AI at uni. I’m also a cyber security professional. AI can be hacked or tricked into exposing training data. Therefore your claim about it disposing of the training material is totally wrong.

Ask your search engine of choice what happened when Gippity was asked to print the word “book” indefinitely. Answer: it printed training material after printing the word book a couple hundred times.

Also my main tutor in uni was a neuroscientist. Dude straight up told us that the current AI was only capable of accurately modelling something as complex as a dragonfly. For larger organisms it is nowhere near an accurate recreation of a brain. There are complexities in our brain chemistry that simply aren’t accounted for in a statistical inference model and definitely not in the current GPT models.

That knowledge is out of date and out of touch. While it’s possible to expose small bits of training data, that’s akin to someone being able to recall a portion of the memory of a scene they saw. However, those exercises sometimes took weeks or months of interrogation techniques, refined over time by people looking to target specific types of responses. Think of it like a skilled police interrogator tricking a toddler out of one of their toys by threatening them or offering them something until it worked. Nowadays, that’s getting far more difficult to do and they’re spending a lot more time and expertise to do it.

Also, consider how complex a dragonfly is and how young this technology is. Very little in tech has ever progressed that fast. Give it five more years and come back to laugh at how naive your comment will seem.

Dammit, so my comment to the other person was a mix of a reply to this one and the last one… not having a good day for language processing, ironically.

Specifically on the dragonfly thing, I don’t think I’ll believe myself naive for writing that post or this one. Dragonflies aren’t very complex and only really have a few behaviours and inputs. We can accurately predict how they will fly. I brought up the dragonfly to mention the limitations of the current tech and concepts. Given the world’s computing power and research investment, the best we can do is a dragonfly for intelligence.

To be fair, scientists don’t entirely understand neurons, and ML-designed neuron data structures behave similarly to very early ideas of what brains do, but it’s based on concepts from the 1950s. There are different segments of the brain which process different things and we sort of think we know what they all do, but most of the studies AI is based on are honestly outdated neuroscience. OpenAI seem to think if they stuff enough data into this language processor it will become sentient, and want an exemption from copyright law so they can be profitable rather than actually improving the tech concepts and designs.

Newer neuroscience research suggests neurons perform differently based on the brain chemicals present, they don’t all always fire at every (or even most) input, and they usually present a train of thought, i.e. thoughts literally move around in the brain’s areas. This is all very different to current ML implementations and is frankly a good enough reason to suggest the tech has a lot of room to develop. I like the field of research and it’s interesting to watch it develop, but they can honestly fuck off telling people they need free access to the world’s content.

TL;DR dragonflies aren’t that complex and the tech has way more room to grow. However, they have to generate revenue to keep going so they’re selling a large inference machine that relies on all of humanity’s content to generate the wrong answer to 2+2.

Your first point is misguided and incorrect. If you’ve ever learned something by ‘cramming’, a.k.a. just repeatedly ingesting material until you remember it completely, you don’t need the book in front of you anymore to write the material down verbatim in a test. You still discarded your training material despite you knowing the exact contents. If this was all the AI could do it would indeed be an infringement machine. But you said it yourself, you need to trick the AI to do this. It’s not made to do this, but certain sentences are indeed almost certain to show up with the right conditioning. Which is indeed something anyone using an AI should be aware of, and avoid that kind of conditioning. (Which in practice often just means, don’t ask the AI to make something infringing)

I think you’re anthropomorphising the tech tbh. It’s not a person or an animal, it’s a machine and cramming doesn’t work in the idea of neural networks. They’re a mathematical calculation over a vast multidimensional matrix, effectively solving a polynomial of an unimaginable order. So “cramming” as you put it doesn’t work because by definition an LLM cannot forget information because once it’s applied the calculations, it is in there forever. That information is supposed to be blended together. Overfitting is the closest thing to what you’re describing, which would be inputting similar information (training data) and performing the similar calculations throughout the network, and it would therefore exhibit poor performance should it be asked to do anything different to the training.

What I’m arguing over here is language rather than a system so let’s do that and note the flaws. If we’re being intellectually honest we can agree that a flaw like reproducing large portions of a work doesn’t represent true learning and shows a reliance on the training data, i.e. it can’t learn unless it has seen similar data before and certain inputs provide a chance it just parrots back the training data.

In the example (repeat book over and over), it has statistically inferred that those are all the correct words to repeat in that order based on the prompt. This isn’t akin to anything human, people can’t repeat pages of text verbatim like this and no toddler can be tricked into repeating a random page from a random book as you say. The data is there, it’s encoded and referenced when the probability is high enough. As another commenter said, language itself is a powerful tool of rules and stipulations that provide guidelines for the machine, but it isn’t crafting its own sentences, it’s using everyone else’s.

Also, calling it “tricking the AI” isn’t really intellectually honest either, as in “it was tricked into exposing it still has the data encoded”. We can state it isn’t preferred or intended behaviour (an exploit of the system) but the system, under certain conditions, exhibits reuse of the training data and the ability to replicate it almost exactly (plagiarism). Therefore it is factually wrong to state that it doesn’t keep the training data in a usable format - which was my original point. This isn’t “cramming”, this is encoding and reusing data that was not created by the machine or the programmer, this is other people’s work that it is reproducing as its own. It does this constantly, from reusing StackOverflow code and comments to copying tutorials on how to do things. I was showing a case where it won’t even modify the wording, but it reproduces articles and programs in their structure and their format. This isn’t originality, creativity or anything that it is marketed as. It is storing, encoding and copying information to reproduce in a slightly different format.

EDITS: Sorry for all the edits. I mildly changed what I said and added some extra points so it was a little more intelligible and didn’t make the reader go “WTF is this guy on about”. Not doing well in the written department today so this was largely gobbledegook before but hopefully it is a little clearer what I am saying.

I never anthropomorphized the technology; unfortunately, due to how language works, it’s easy to misinterpret it as such. I was indeed trying to explain overfitting. You are forgetting the fact that current AI technology (artificial neural networks) is based on biological neural networks. There is a range of quirks that it exhibits that biological neural networks do as well. But it is not human, nor anything close. But that does not mean that there are no similarities that can be rightfully pointed out.

Overfitting isn’t just what you describe though. It also occurs if the prompt guides the AI towards a very specific part of its training data. To the point where the calculations it will perform are extremely certain about what words come next. Overfitting here isn’t caused by an abundance of data, but rather a lack of it. The training data isn’t being produced from within the model, but as a statistical inevitability of the mathematical version of your prompt. Which is why it’s tricking the AI, because an AI doesn’t understand copyright - it just performs the calculations. But you do. And so using that as an example is like saying “Ha, stupid gun. I pulled the trigger and you shot this man in front of me, don’t you know murder is illegal buddy?”

Nobody should be expecting a machine to use itself ethically. Ethics is a human thing.

People that use AI have an ethical obligation to avoid overfitting. People that produce AI also have an ethical obligation to reduce overfitting. But a prompt quite literally has infinite combinations (within the token limits) to consider, so overfitting will happen in fringe situations. That’s not because that data is actually present in the model, but because the combination of the prompt with the model pushes the calculation towards a very specific prediction which can heavily resemble or be verbatim the original text. (Note: I do really dislike companies that try to hide the existence of overfitting to users though, and you can rightfully criticize them for claiming it doesn’t exist)
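
To make the "lack of data" point concrete, here is a toy sketch. It is not how a real LLM is built: it is a tiny word-lookup model, and the names `train`, `generate` and the sample sentence are all made up for illustration. The only assumption it demonstrates is that when a model has seen exactly one continuation for a given context, greedy prediction can only reproduce that continuation.

```python
from collections import Counter, defaultdict

def train(texts, context=2):
    """Map each `context`-word window to counts of the word that follows it."""
    model = defaultdict(Counter)
    for text in texts:
        words = text.split()
        for i in range(len(words) - context):
            model[tuple(words[i:i + context])][words[i + context]] += 1
    return model

def generate(model, prompt, max_words=20, context=2):
    """Greedily extend `prompt` with the most frequent next word."""
    out = prompt.split()
    for _ in range(max_words):
        counts = model.get(tuple(out[-context:]))
        if not counts:
            break  # context never seen in training: nothing to predict
        out.append(counts.most_common(1)[0][0])
    return " ".join(out)

# Hypothetical one-sentence "training set": every two-word context is unique.
training_text = "the quick brown fox jumps over the lazy dog near the quiet river bank"
model = train([training_text])

# A prompt that matches the start of the training text gets it back verbatim.
print(generate(model, "the quick"))
# A prompt the model has never seen gets nothing added.
print(generate(model, "hello world"))
```

With only one source for each context, the "prediction" is the training text. Feed the same toy model many different sentences and the counts start competing, which is roughly the intuition behind why more varied data makes verbatim output rarer.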

This isn’t akin to anything human, people can’t repeat pages of text verbatim like this and no toddler can be tricked into repeating a random page from a random book as you say.

This is incorrect. A toddler can and will verbatim repeat nursery rhymes that it hears. It’s literally one of their defining features, to the dismay of parents and grandparents around the world. I can also whistle pretty much my entire music collection exactly as it was produced because I’ve listened to each song hundreds if not thousands of times. And I’m quite certain you too have a situation like that. An AI’s mind does not decay or degrade (nor does it change for the better like humans) and the data encoded in it is far greater, so it will present more of these situations in its fringes.

but it isn’t crafting its own sentences, it’s using everyone else’s.

How do you think toddlers learn to make their first own sentences? It’s why parents spend so much time saying “Papa” or “Mama” to their toddler. Exactly because they want them to copy them verbatim. Eventually the corpus of their knowledge grows big enough to the point where they start to experiment and eventually develop their own style of talking. But it’s still heavily based on the information they take in. It’s why we have dialects and languages. Take a look at what happens when children don’t learn from others: https://en.wikipedia.org/wiki/Feral_child So yes, the AI is using its training data, nobody’s arguing it doesn’t. But it’s trivial to see how it’s crafting its own sentences from that data for the vast majority of situations. It’s also why you can ask it to talk like a pirate, and then it will suddenly know how to mix in the essence of talking like a pirate into its responses. Or how it can remember names and mix those into sentences.

Therefore it is factually wrong to state that it doesn’t keep the training data in a usable format

If your argument is that it can produce something that happens to align with its training data with the right prompt, well yeah that’s not incorrect. But it is so heavily misguided and borders on bad faith to suggest that this tiny minority of cases where overfitting occurs is indicative of the rest of it. LLMs are prediction machines, so if you know how to guide it towards what you want it to predict, and that is in the training data, it’s going to predict that most likely. Under normal circumstances where the prompt you give it is neutral and unique, you will basically never encounter overfitting. You really have to try for most AI models.

But then again, you might be arguing this based on a specific AI model that is very prone to overfitting, while I am arguing this out of the technology as a whole.

This isn’t originality, creativity or anything that it is marketed as. It is storing, encoding and copying information to reproduce in a slightly different format.

It is originality, as these AI can easily produce material never seen before in the vast, vast majority of situations. Which is also what we often refer to as creativity, because it has to be able to mix information and still retain legibility. Humans also constantly reuse phrases, ideas, visions, ideals of other people. It is intellectually dishonest to not look at these similarities in human psychology and then treat AI as having to be perfect all the time, never once saying the same thing as someone else. To convey certain information, there are only finite ways to do so within the English language.

Kids pay for books, OpenAI should also pay for the material access used for training.

That would be true if they used material that was paywalled. But the vast majority of the training information used is publicly available. There’s plenty of freely available books and information that you only need an internet connection to access and learn from.

OpenAI like other AI companies keep their data sources confidential. But there are services and commercial databases for books that people understand are commonly used in the AI industry.

“This process is akin to how humans learn… The AI discards the original text, keeping only abstract representations…”

Now I sail the high seas myself, but I don’t think Paramount Studios would buy anyone’s defence they were only pirating their movies so they can learn the general content so they can produce their own knockoff.

Yes artists learn and inspire each other, but more often than not I’d imagine they consumed that art in an ethical way.

Now I sail the high seas myself, but I don’t think Paramount Studios would buy anyone’s defence they were only pirating their movies so they can learn the general content so they can produce their own knockoff.

We don’t know exactly how they source their data (and that is definitely shady), but if I can gain access to a movie in a legal way, I don’t see why I would not be able to gather statistics from said movie, including running a speech-to-text model to caption it, then make statistics of how many times a few words were used, and followed by which ones. This is an oversimplified explanation of what an LLM does, but it’s the fairest I can come up with, and it would be legal to do so. The models are always orders of magnitude smaller than the data they are trained on.
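
As a rough illustration of the "which words follow which" statistics described above, here is a minimal sketch. It is deliberately oversimplified: real LLMs learn weights in a neural network rather than keeping a table of counts, and the function names and the sample caption text are hypothetical, made up for this example.

```python
from collections import Counter, defaultdict

def bigram_counts(text):
    """Count, for each word, which words follow it and how often."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def most_likely_next(follows, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    counter = follows.get(word.lower())
    if not counter:
        return None
    return counter.most_common(1)[0][0]

# Hypothetical caption text standing in for a speech-to-text transcript.
captions = "the bees fly to the hive and the bees make honey"
follows = bigram_counts(captions)

print(most_likely_next(follows, "the"))  # "bees" follows "the" twice, "hive" once
```

The table built here stores only counts of word pairs, not the caption itself, which is the point the comment is making about statistics versus copies.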

That said, I don’t imply that I’m happy with the state of high tech companies, the AI hype, the energy consumption, or the impact on the humble people. But I’ve put a lot of thought into this (and learning about machine learning for real), and I think this is not a ML problem, but a problem in the economic, legal and political system. AI hype is just a symptom.

This process is akin to how humans learn by reading widely and absorbing styles and techniques, rather than memorizing and reproducing exact passages.

Machine learning algorithms are not people and are not ingesting these works the same way a person does. This argument is brought up all the time and just doesn’t ring true. You’re defending the unethical use of copyrighted works by a giant corporation with a metaphor that doesn’t have any bearing on reality; in an age where artists are already shamefully undervalued. Creating art is a human process with the express intent of it being enjoyed by other humans. Having an algorithm do it is removing the most important part of art; the humanity.