Thousands of authors demand payment from AI companies for use of copyrighted works::Thousands of published authors are requesting payment from tech companies for the use of their copyrighted works in training artificial intelligence tools, marking the latest intellectual property critique to target AI development.

How can they prove that not some abstract public data has been used to train algorithms, but their particular intellectual property?

Well, if you ask e.g. ChatGPT for the lyrics to a song or page after page of a book, and it spits them out 1:1 correct, you could assume that it must have had access to the original.

Or at least excerpts from it. But even then, it’s one thing for a person to put up a quote from their favourite book on their blog, and a completely different thing for a private company to use that data to train a model, and then sell it.

Even more so, if you consider that the LLMs are marketed to replace the authors.

Yeah which I still feel is utterly ridiculous. I love the idea of AI tools to assist with things, but as a complete replacement? No thank you.

I enjoy using things like SynthesizerV and VOCALOID because my own voice is pretty meh and my singing skills aren’t there. It’s fun to explore the voices, and learn how to use the tools. That doesn’t mean I’d like to see all singers replaced with synthesized versions. I view SynthV and the like as instruments, not much more.

I’ve used LLMs to proofread stuff, and help me rephrase letters and such, but I’d never hire an editor to do such small tasks for me anyway. The result has always required editing anyway, because the LLMs have a tendency to make stuff up.

Cases like that I don’t see a huge problem with. At my workplace though they’re talking about generating entire application layouts and codebases with AI and, being in charge of the AI evaluation project, the tech just isn’t there yet. You can in a sense use AI to make entire projects, but it’ll generate gnarly unmaintainable rubbish. You need a human hand in there to guide it.

Otherwise you end up with garbage websites with endlessly generated AI content, that can easily be manipulated by third party actors.

you could assume that it must have had access to the original.

I don’t know if that’s true. If Google grabs that book from a pirate site. Then publishes the work as search results. ChatGPT grabs the work from Google results and cobbles it back together as the original.

Who’s at fault?

I don’t think it’s as straightforward as “ChatGPT can reproduce the work, therefore it stole it.”

Both are at fault: Google for distributing pirated material and OpenAI for using said material for financial gain.

Copyright doesn’t work like that. Say I sell you the rights to Thriller by Michael Jackson. You might not know that I don’t have the rights. But even if you bought the rights from me, whoever actually has the rights is totally in their legal right to sue you, because you never actually purchased any rights.

So if ChatGPT rips it off Google who ripped it off a pirate site, then everyone in that chain who reproduced copyrighted works without permission from the copyright owners is liable for the damages caused by their unpermitted reproduction.

It’s literally the same as how downloading something from a pirate site doesn’t become legal just because someone ripped it before you.

Can it recreate anything 1:1? When both my wife and I tried to get them to do that they would refuse, and if pushed they would fail horribly.

This is what I got. Looks pretty 1:1 for me.

Hilarious that it started with just “Buddy”, like you’d be happy with only the first word.

Yeah, for some reason it does that a lot when I ask it for copyrighted stuff.

As if it knew it wasn’t supposed to output that.

To be fair you’d get the same result easier by just googling “we will rock you lyrics”

How is chatgpt knowing the lyrics to that song different from a website that just tells you the lyrics of the song?

Two points:

- Google spitting out the lyrics isn’t ok from a copyright standpoint either. The reason why songwriters/singers/music companies don’t sue people who publish lyrics (even though they totally could) is that there are no damages. They sell music, so the lyrics being published for free doesn’t hurt their music business and it also doesn’t hurt their songwriting business. Other types of copyright infringement that musicians/music companies care about are heavily policed, also on Google.

- Content generation AI has a different use case, and it could totally hurt both of these businesses. My test from above that got it to spit out the lyrics verbatim shows that the AI did indeed use copyrighted works for its training. Now I can ask GPT to generate lyrics in the style of Queen, and it will basically perform the lyricist’s job. This can easily be done on a commercial scale, replacing the very human who has written these lyrics. Now take this a step further and take a voice-generating AI (of which there are many), which was similarly trained on copyrighted audio samples of Freddie Mercury. Then add to the mix a music-generating AI, also fed with works of Queen, and now you have a machine capable of generating fake Queen songs based directly on Queen’s works. You can do the very same with other types of media as well.

And this is where the real conflict comes from.

- There are a lot of possible ways to audit an AI for copyrighted works, several of which have been proposed in the comments here, but what this could lead to is laws requiring an accounting log of all material that has been used to train an AI as well as all copyrights and compensation, etc.

Not without some seriously invasive warrants! Ones that will never be granted for an intellectual property case.

Intellectual property is an outdated concept. It used to exist so wealthier outfits couldn’t copy your work at scale and muscle you out of an industry you were championing.

It simply does not work the way it was intended. As technology spreads, the barrier for entry into most industries wherein intellectual property is important has been all but demolished.

i.e. 50 years ago: your song that your band performed is great. I have a recording studio and am gonna steal it muahahaha.

Today: “anyone have an audio interface I can borrow so my band can record, mix, master, and release this track?”

Intellectual property ignores the fact that, idk, Isaac Newton and Gottfried Wilhelm Leibniz both independently invented calculus at the same time on opposite ends of a disconnected globe. That is to say, intellectual property doesn’t exist.

Ever opened a post to make a witty comment to find someone else already made the same witty comment? Yeah. It’s like that.

I’d think that given the nature of the language models and how the whole AI thing tends to work, an author can pluck a unique sentence from one of their works, ask AI to write something about that, and if AI somehow ‘magically’ writes out an entire paragraph or even chapter of the author’s original work, well tada, AI ripped them off.
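A rough sketch of how that kind of test could be scored, assuming you already have the model’s reply as a plain string. No real chatbot API is called here; `overlap_report` and the sample sentences are made up purely for illustration. It counts the longest run of consecutive words the reply shares with the original, plus an overall similarity score, so a reply with a few swapped words still shows up as a near copy.

```python
from difflib import SequenceMatcher


def overlap_report(original: str, generated: str) -> dict:
    """Compare two texts word by word, ignoring case (hypothetical helper)."""
    a = original.lower().split()
    b = generated.lower().split()
    # autojunk=False so frequent words like "the" are not silently ignored
    matcher = SequenceMatcher(None, a, b, autojunk=False)
    longest = matcher.find_longest_match(0, len(a), 0, len(b))
    return {
        # number of consecutive words copied verbatim
        "longest_shared_run": longest.size,
        # 0.0 = nothing in common, 1.0 = identical word sequences
        "similarity": round(matcher.ratio(), 2),
    }


# Made-up stand-ins for "the author's passage" and "what the model wrote"
original = "the quick brown fox jumps over the lazy dog near the quiet river bank"
generated = "the quick brown fox leaps over the lazy dog near the quiet river bank"
print(overlap_report(original, generated))
```

What counts as “too similar” is a legal question, not something this score can settle; it only makes the comparison less eyeball-based.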

I think that to protect creators they either need to be transparent about all content used to train the AI (highly unlikely) or have a disclaimer of liability, wherein if original content has been used in training the AI, then the original content creator would have standing for legal action.

The only other alternative would be to ensure that the AI specifically avoids copyrighted or trademarked content going back to a certain date.

Why a certain date? That feels arbitrary

At a certain age some media becomes public domain

Then it is no longer copyrighted

Personally speaking, I’ve generated some stupid images like different cities covered in baked beans and have had crude watermarks generate with them where they were decipherable enough that I could find some of the source images used to train the AI. When it comes to photorealistic image generation, if all the AI does is mildly tweak the watermark then it’s not too hard to trace back.

They can’t. All they could prove is that their work is part of a dataset that still exists.

You know what would solve this? We all collectively agree this fucking tech is too important to be in the hands of a few billionaires, start an actual public free open source fully funded and supported version of it, and use it to fairly compensate every human being on Earth according to what they contribute, in general?

Why the fuck are we still allowing a handful of people to control things like this??

Because the tech behind it isn’t cheap and money does not fall from trees.

No entity on the planet has more money than our governments. It’d be more efficient for a government to fund this than any private company.

Many governments on the planet have less money than some big tech or oil companies. Obviously not those of large industrious nations, but most nations aren’t large and industrious.

The government and efficiency don’t go together

Plenty of research shows that each dollar put into government programs gets much more return than in private companies. This is literally a neolib propaganda talking point.

There is nothing objectively wrong with your statement. However, we somehow always default to solving that issue by having some dragon hoard enough gold, and there is something objectively wrong with that.

Because we shy away from responsibility.

I think the longer response to this is more accurate. It’s more “because capitalism” than anything else.

And capitalism over the course of the 20th century made very successful attempts at completely alienating the working class and destroying all class consciousness or material awareness.

So people keep thinking that the problem is that we as individuals are doing capitalism wrong. Not capitalism.

You think it is so simple you can just download it and run it on your laptop?

Someone should AGPL their novel and force the AI company to open source their entire neural network.

There is already a business model for compensating authors: it is called buying the book. If the AI trainers are pirating books, then yeah - sue them.

There are plagiarism and copyright laws to protect the output of these tools: if the output is infringing, then sue them. However, if the output of an AI would not be considered infringing for a human, then it isn’t infringement.

When you sell a book, you don’t get to control how that book is used. You can’t tell me that I can’t quote your book (within fair use restrictions). You can’t tell me that I can’t refer to your book in a blog post. You can’t dictate who may and may not read a book. You can’t tell me that I can’t give a book to a friend. Or an enemy. Or an anarchist.

Folks, this isn’t a new problem, and it doesn’t need new laws.

It’s 100% a new problem. There’s established precedent for things costing different amounts depending on their intended use.

For example, buying a consumer copy of a song doesn’t give you the right to play that song in a stadium or a restaurant.

Training an entire AI to make potentially an infinite number of derived works from your work is 100% worthy of requiring a special agreement. This even goes beyond simple payment to consent; a climate expert might not want their work in an AI which might severely mischaracterize the conclusions, or might want to require that certain queries are regularly checked by a human, etc.

My point is that the restrictions can’t go on the input, it has to go on the output - and we already have laws that govern such derivative works (or reuse / rebroadcast).

When you sell a book, you don’t get to control how that book is used.

This is demonstrably wrong. You cannot buy a book, and then go use it to print your own copies for sale. You cannot use it as a script for a commercial movie. You cannot go publish a sequel to it.

Now please just try to tell me that AI training is specifically covered by fair use and satire case law. Spoiler: you can’t.

This is a novel (pun intended) problem space and deserves to be discussed and decided, like everything else. So yeah, your cavalier dismissal is cavalierly dismissed.

No, you misunderstand. Yes, they can control how the content in the book is used - that’s what copyright is. But they can’t control what I do with the book - I can read it, I can burn it, I can memorize it, I can throw it up on my roof.

My argument is that there is nothing wrong with training an AI with a book - that’s input for the AI, and that is indistinguishable from a human reading it.

Now what the AI does with the content - if it plagiarizes or violates fair use - that’s a problem, but those problems are already covered by copyright laws. They have no more business saying what can or cannot be input into an AI than they can restrict what I can read (and learn from). They can absolutely enforce their copyright on the output of the AI just like they can if I print copies of their book.

My objection is strictly on the input side, and the output is already restricted.

Makes sense. I would love to hear how anyone can disagree with this. Just because an AI learned or trained from a book doesn’t automatically mean it violated any copyrights.

The base assumption of those with that argument is that an AI is incapable of being original, so it is “stealing” anything it is trained on. The problem with that logic is that’s exactly how humans work - everything they say or do is derivative from their experiences. We combine pieces of information from different sources, and connect them in a way that is original - at least from our perspective. And not surprisingly, that’s what we’ve programmed AI to do.

Yes, AI can produce copyright violations. They should be programmed not to. They should cite their sources when appropriate. AI needs to “learn” the same lessons we learned about not copy-pasting Wikipedia into a term paper.

It’s specifically distribution of the work or derivatives that copyright prevents.

So you could make an argument that an LLM that’s memorized the book and can reproduce (parts of) it upon request is infringing. But one that’s merely trained on the book, but hasn’t memorized it, should be fine.

But by their very nature LLMs simply redistribute the material they’ve been trained on. They may disguise it assiduously, but there is no person at the center of the thing adding creative strokes. It’s copyrighted material in, copyrighted material out, so the plaintiffs allege.

They don’t redistribute. They learn information about the material they’ve been trained on - not the material itself* - and can use it to generate material they’ve never seen.

* Bigger models seem to memorize some of the material and can infringe, but that’s not really the goal.

This is a little off, when you quote a book you put the name of the book you’re quoting. When you refer to a book, you, um, refer to the book?

I think the gist of these authors’ complaints is that a sort of “technology laundered plagiarism” is occurring.

Copyright 100% applies to the output of an AI, and it is subject to all the rules of fair use and attribution that entails.

That is very different than saying that you can’t feed legally acquired content into an AI.

However, if the output of an AI would not be considered infringing for a human, then it isn’t infringement.

It’s an algorithm that’s been trained on numerous pieces of media by a company looking to make money off it. I see no reason to give them a pass on fairly paying for that media.

You can see this if you reverse the comparison, and consider what a human would do to accomplish the task in a professional setting. That’s all an algorithm is. An execution of programmed tasks.

If I gave a worker a pirated link to several books and scientific papers in the field, and asked them to synthesize an overview/summary of what they read and publish it, I’d get my ass sued. I have to buy the books and the scientific papers. STEM companies regularly pay for access to papers and codes and standards. Why shouldn’t an AI have to do the same?

While I am rooting for authors to make sure they get what they deserve, I feel like there is a bit of a parallel to textbooks here. As an engineer, if I learn about statics from a textbook and then go use that knowledge to help design a bridge that I and my company profit from, the textbook company can’t sue. If my textbook has a detailed example for how to build a new bridge across the Tacoma Narrows, and I use all of the same design parameters for a real Tacoma Narrows bridge, they may have much more of a case.

It’s not really a parallel.

The text books don’t have copyrights on the concepts and formulae they teach. They only have copyrights for the actual text.

If you memorize the text book and write it down 1:1 (or close to it) and then sell that text you wrote down, then you are still in violation of the copyright.

And that’s what the likes of ChatGPT are doing here. For example, ask it to output the lyrics for a song and it will spit out the whole (copyrighted) lyrics 1:1 (or very close to it). Same with pages of books.

The memorization is closer to that of a fanatic fan of the author. It usually knows the beginning of the book and the more well known passages, but not entire longer works.

By now, ChatGPT is trying to refuse to output copyrighted materials even where it could, and though it can be tricked, they appear to have implemented a hard filter for some more well known passages, which stops generation a few words in.

Have you tried just telling it to “continue”?

Somewhere in the comments to this post I posted screenshots of me trying to get lyrics for “We will rock you” from ChatGPT. It first just spat out “Verse 1: Buddy,” and ended there. So I answered with “continue”, it spat out the next line and after the second “continue” it gave me the rest of the lyrics.

Similar story with e.g. the first chapter of Harry Potter 1 and other stuff I tried. The output is often not perfect, with a few words being wrong, but it’s very clearly a “derived work” of the original. In the view of copyright law, changing a few words here is not a valid way of getting around copyrights.

But you paid for the textbook

Libraries exist

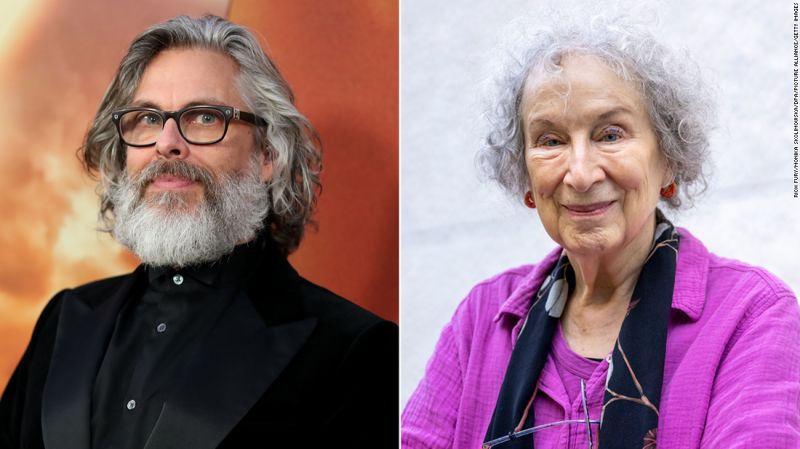

I think that these are fiction writers. The maths you’d use to design that bridge is fact and the book company merely decided how to display facts. They do not own that information, whereas the Handmaid’s Tale was the creation of Margaret Atwood and was an original work.

You have a point but there’s a pretty big difference between something like a statistics textbook and the novel “Dune” for instance. One was specifically written to teach mostly pre-existing ideas and the other was created as entertainment to sell to as wide an audience as possible.

Plagiarism filters frequently trigger on chatgpt written books and articles.

All this copyright/AI stuff is so silly and a transparent money grab.

They’re not worried that people are going to ask the LLM to spit out their book; they’re worried that they will no longer be needed because an LLM can write a book for free. (I’m not sure this is feasible right now, but maybe one day?) They’re trying to strangle the technology in the courts to protect their income. That is never going to work.

Notably, there is no “right to control who gets trained on the work” aspect of copyright law. Obviously.

There is nothing silly about that. It’s a fundamental question about using content of any kind to train artificial intelligence that affects way more than just writers.

I seriously doubt Sarah Silverman is suing OpenAI because she’s worried ChatGPT will one day be funnier than she is. She just doesn’t want it ripping off her work.

What do you mean when you say “ripping off her work”? What do you think an LLM does, exactly?

In her case, taking elements of her book and regurgitating them back to her. Which sounds a lot like they could be pirating her book for training purposes to me.

Quoting someone’s book is not “ripping off” the work.

How is it able to quote the book? Magic?

So you’re saying that as long as they buy 1 copy of the book, it’s all good?

No, I’m not saying that. If she’s right and it can spit out any part of her book when asked (and someone else showed that it does that with Harry Potter), it’s plagiarism. They are profiting off of her book without compensating her. Which is a form of ripping someone off. I’m not sure what the confusion here is. If I buy someone’s book, that doesn’t give me the right to put it all online for free.

I don’t know how I feel about this honestly. AI took a look at the book and added the statistics of all of its words into its giant statistic database. It doesn’t have a copy of the book. It’s not capable of rewriting the book word for word.

This is basically what humans do. A person reads 10 books on a subject, studies, becomes something of a subject matter expert, and writes their own book.

Artists use reference art all the time. As long as they don’t get too close to the original reference nobody calls any flags.

These people are scared for their viability in their user space and they should be, but I don’t think trying to put this genie back in the bottle or extra charging people for reading their stuff for reference is going to make much difference.

So what’s the difference between a person reading their books and using the information within to write something and an ai doing it?

Because AIs aren’t inspired by anything and they don’t learn anything

So uninspired writing is illegal?

Language models actually do learn things in the sense that: the information encoded in the training model isn’t usually* taken directly from the training data; instead, it’s information that describes the training data, but is new. That’s why it can generate text that’s never appeared in the data.

* The bigger models seem to remember some of the data and can reproduce it verbatim; but that’s not really the goal.
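To make the “statistics about the text, not the text itself” idea concrete, here is a deliberately tiny toy. It is not how an LLM actually works (those are neural networks, not lookup tables), and `train_bigrams`, `generate`, and the two-sentence corpus are invented for this sketch. The table only stores which word followed which, yet sampling from it can produce word sequences that never appeared in the corpus, and with a corpus this small it can also reproduce an original sentence verbatim.

```python
import random
from collections import defaultdict


def train_bigrams(text: str) -> dict:
    """Record, for each word, which words followed it in the training text."""
    words = text.split()
    table = defaultdict(list)
    for current, following in zip(words, words[1:]):
        table[current].append(following)
    return dict(table)


def generate(table: dict, start: str, length: int, seed: int = 0) -> str:
    """Sample up to `length` words by repeatedly picking a recorded follower."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(length - 1):
        options = table.get(out[-1])
        if not options:  # no recorded follower for this word, so stop
            break
        out.append(rng.choice(options))
    return " ".join(out)


# Toy "training data": two sentences, tokenized by whitespace
corpus = "the cat sat on the mat . the dog sat on the rug ."
table = train_bigrams(corpus)
print(generate(table, "the", 6))
```

Try a few different `seed` values: some outputs mix the two sentences, others repeat one of them exactly, which is roughly the memorization caveat in the footnote.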

What does inspiration have to do with anything? And to be honest, humans being inspired has led to far more blatant copyright infringement.

As for learning, they do learn. No different than us, except we learn silly abstractions to make sense of things while AI learns from trial and error. Ask any artist if they’ve ever looked at someone else’s work to figure out how to draw something, even if they’re not explicitly looking up a picture, if they’ve ever seen a depiction of it, they recall and use that. Why is it wrong if an AI does the same?

A person is human and capable of artistry and creativity, computers aren’t. Even questioning this just means dehumanizing artists and art in general.

Not being allowed to question things is a really shitty precedent, don’t you think?

Do you think a hammer and a nail could do anything on their own, without a hand picking them up guiding them? Because that’s what a computer is. Nothing wrong with using a computer to paint or write or record songs or create something, but it has to be YOU creating it, using the machine as a tool. It’s also in the actual definition of the word: art is made by humans. Which explicitly excludes machines. Period. Like I’m fine with AI when it SUPPORTS an artist (although sometimes it’s an obstacle because sometimes I don’t want to be autocorrected, I want the thing I write to be written exactly as I wrote it, for whatever reason). But REPLACING an artist? Fuck no. There is no excuse for making a machine do the work and then to take the credit just to make a quick easy buck on the backs of actual artists who were used WITHOUT THEIR CONSENT to train a THING to replace them. Nah fuck off my guy. I can clearly see you never did anything creative in your whole life, otherwise you’d get it.

Nah fuck off my guy. I can clearly see you never did anything creative in your whole life, otherwise you’d get it.

Oh, right. So I guess my 20+ year Graphic Design career doesn’t fit YOUR idea of creative. You sure have a narrow life view. I don’t like AI art at all. I think it’s a bad idea. You’re a bit too worked up about this to try to discuss anything. Not too excited about getting told to fuck off about an opinion. This place is no better than reddit ever was.

Of course I’m worked up. I love art, I love doing art, I have multiple friends and family members who work with art, and art is the last genuine thing that’s left in this economy. So yeah, obviously I’m angry at people who don’t get it and celebrate this bullshit just because they are too lazy to pick up a pencil, get good and draw their own shit, or alternatively commission what they wanna see from a real artist. Art was already PERFECT as it was, I have a right to be angry that tech bros are trying to completely ruin it after turning their nose up at art all their lives. They don’t care about why art is good? Ok cool, they can keep doing their graphs and shit and just leave art alone.

Obligatory xkcd: https://xkcd.com/827/

What did you pay the author of the books and papers published that you used as sources in your own work? Do you pay those authors each time someone buys or reads your work? At most you pay $0-$15 for a book anyway.

In regards to free advertising when your source material is used… if your material is a good source and someone asks say ChatGPT, shouldn’t your work be mentioned if someone asks for a book or paper and you have written something useful for it? Assuming it doesn’t hallucinate.

That’s the “paid in exposure” argument.

And I’m not sure what my company pays, but they purchase access to scientific papers and industrial standards. The market price I’ve seen for them is hundreds of dollars. You either pay an ongoing subscription to access the information, or you pay a larger lump sum to own a copy that cannot legally be reproduced.

Companies pay for this sort of thing. AI shouldn’t get an exception.

I think this is more about frustration experienced by artists in our society at being given so little compensation.

The answer is staring us in the face. UBI goes hand in hand with developments in AI. Give artists a basic salary from the government so they can afford to live well. This isn’t an AI problem, this is a broken society problem. I support artists advocating for themselves, but the fact that they aren’t asking for UBI really speaks to how hopeless our society feels right now.

This is so stupid. If I read a book and get inspired by it and write my own stuff, as long as I’m not using the copyrighted characters, I don’t need to pay anyone anything other than purchasing the book which inspired me originally.

If this were a law, why shouldn’t pretty much every modern-day fantasy author pay the Tolkien foundation, or any non-fiction author pay for each citation?

There’s a difference between a sapient creature drawing inspiration and a glorified autocomplete using copyrighted text to produce sentences which are only cogent due to substantial reliance upon those copyrighted texts.

All AI creations are derivative and subject to copyright law.

There’s a difference between a sapient creature drawing inspiration and a glorified autocomplete using copyrighted text to produce sentences which are only cogent due to substantial reliance upon those copyrighted texts.

But the AI is looking at thousands, if not millions of books, articles, comments, etc. That’s what humans do as well - they draw inspiration from a variety of sources. So is sentience the distinguishing criteria for copyright? Only a being capable of original thought can create original work, and therefore anything not capable of original thought cannot create copyrighted work?

Also, irrelevant here but calling LLMs a glorified autocomplete is like calling jet engines a “glorified horse”. Technically true but you’re trivialising it.

Yes. Creative work is made by creative people. Writing is creative work. A computer cannot be creative, and thus generative AI is a disgusting perversion of what you wanna call “literature”. Fuck, writing and art have always been primarily about self-expression. Computers can’t express themselves with original thoughts. That’s the whole entire point. And this is why humanistic studies are important, by the way.

I absolutely agree with the second half, guided by Ian Kerr’s paper “Death of the AI Author”; quoting from the abstract:

Claims of AI authorship depend on a romanticized conception of both authorship and AI, and simply do not make sense in terms of the realities of the world in which the problem exists. Those realities should push us past bare doctrinal or utilitarian considerations about what an author must do. Instead, they demand an ontological consideration of what an author must be.

I think the part courts will struggle with is if this ‘thing’ is not an author of the works then it can’t infringe either?

Courts have already weighed in, and what they said is basically that copyright can’t be claimed for the throw-up AIs come up with, which means corporations can’t use it to make money or sue anyone for using those products. Which means AI-generated products are a whole bowl of nothing legally, and have no identity nor any value. The whole reason commissions are expensive is that someone has spent money, time and effort to make the thing you asked of them, and that’s why compensating them with money is right.

Also, why can’t AI be used to automatize the shit jobs and allow us to do the creative work? Why are artists and creatives being pushed out of doing the jobs only humans can do? Like this is the thing that makes me furious: that STEM bros are blowing each other in the fields over humans being pushed out of humanity. Without once thinking AI is much more apt at replacing THEIR jobs, but I’m not calling for their jobs to be removed. This is just a dystopic reality we’re barreling towards, and there are people who are HAPPY about humans losing what makes us human and speeding toward pure, total, complete misery. That’s why I’m emotional about this: because art is only, solely made by humans, and people create art to communicate something they have inside. And only humans can do that - and some animals, maybe. Machines have nothing inside. They are nothing, they are only tools. It’s like asking a hammer to write its own poetry, it’s just insane.

The trivialization doesn’t negate the point though, and LLMs aren’t intelligence.

The AI consumed all of that content and I would bet that not a single one of the people who created the content was compensated, but the AI relies strictly on those people to produce anything coherent.

I would argue that yes, generative artificial stupidity doesn’t meet the minimum bar of original thought necessary to create a standard copyrightable work unless every input has consent to be used, and laundering content through multiple generations of an LLM or through multiple distinct LLMs should not impact the need for consent.

Without full consent, it’s just a massive loophole for those with money to exploit the hard work of the masses who generated all of the actual content.

The thing is these models aren’t aiming to re-create the work of any single author, but merely to put words in the right order. Imo, if we allow authors to copyright the order of their words instead of their whole original creations then we are actually reducing the threshold for copyright protection and (again imo) increasing the number of acts that would be determined to be copyright protected.

Machine learning algorithms do not get inspired, they replicate. If I tell an ML model to write a scene for a film in the style of Charlie Kaufman, it has to have been told who Kaufman is and been fed a lot of manuscripts. Then it tries to mimic the style and guess what words come next.

This is not how we humans get inspired. And if we do, we get accused of stealing. Which it is.

Because a computer can only read the stuff, chew it and throw it up. With no permission. Without needing to practice and create its own personal voice. It’s literally recycled work by other people, because computers cannot be creative. On the other hand, human writers DO develop their own style, find their own voice, and what they write becomes unique because of how they write it and the meaning they give to it. It’s not the same thing, and writers deserve to get repaid for having their art stolen by corporations to make a quick and easy buck. Seriously, you wanna write? Pick up a pen and do it. Practice, practice, practice for weeks months years decades. And only then you may profit. That’s how it always was and it always worked fine that way. Fuck computers.
