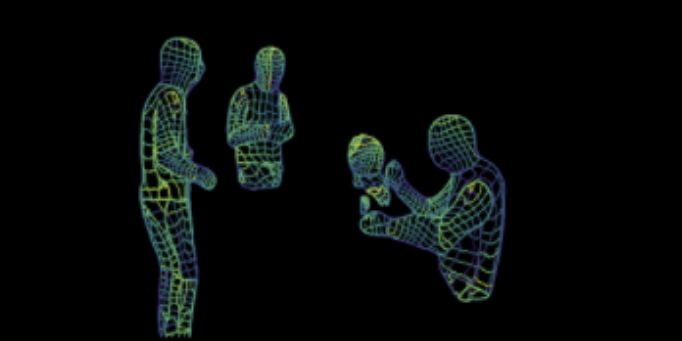

“We developed a deep neural network that maps the phase and amplitude of WiFi signals to UV coordinates within 24 human regions. The results of the study reveal that our model can estimate the dense pose of multiple subjects, with comparable performance to image-based approaches, by utilizing WiFi signals as the only input.”

You know what else lets you see through walls? Windows. (Suck it, Linux users!)

In more than one sense, yes.

“we threw a deep neural network at the wall to see if it sticks”

Lead paint coming back into fashion.

I lick that 👍

Time to plaster your outer walls with fine wire mesh.

If I didn’t have a reason for why Ethernet is superior, I have one now!

Wasn’t this the plot point of The Dark Knight?

We only have to enter his name to be safe.

Yes, but he specifically used cell signals.

Came here to say this!

Henceforth, the building code shall mandate that every room be a perfectly grounded Faraday cage (/s).

Still, imagine lethal drones integrated with that technology (of course, they already have infrared, and maybe even X-rays at some suitable wavelength).

Nevertheless, pretty cool to see how far we can take preexisting technology with the help of some deep learning layers.

Here’s what they’re putting in the goggles that infantrymen wear now.

I don’t care to guess what the drones are packing.

Based on the cell phone reception in my house, I already suspect it’s a Faraday cage.

It seems I am going to repeat myself forever. We got it wrong when we decided that you only have privacy when someone can’t physically see what you are up to. Nothing else is treated this way. You are not allowed to drive as fast as your car can physically go. You are not allowed to enter anything locked just because you are able to pick the lock. You are not allowed to steal whatever you want as long as no one tackles you for it. And yet, somehow, it became accepted that merely because someone can get a photo of you, they have the legal right to take it.

As if access to better technology means you should follow fewer moral rules rather than more. Someone with a junk camera from the ’80s could do far less perving than someone with the cameras and drones out there today.

Duh? I don’t think anyone with the right field of study thought this wasn’t possible. It just doesn’t have good use cases.

I’m an EE, and I have serious doubts that this actually works nearly as well as they claim. This sort of stuff is hard, even with purpose-built radar systems. I’m working on angle estimation in multipath environments, and that shit fucks your signals up. This may work if you have extremely precisely characterised the target room, the walls, and a ton of stuff around it, and then change nothing but the motion of the people. But that’s not practical.

It’s Popular Mechanics, of course it doesn’t work as well as they say it does. But the theory has been around a long time.

Full body vr tracking without sensors?

The human presence sensors based on this are already on the consumer market, we just need to dial up the sensitivity.

There are already smart light bulbs you can buy off the shelf that use radio signals to see when somebody is in the room. Then it can turn on the lights automatically, without a camera or infrared sensor in the area.

Years ago there was a journal article on gait recognition through home WiFi.

This article is a year old. Do we have posting standards in here?

Sorry, I didn’t notice the date when I posted. I can take it down if requested.

I wonder if it’s real-time. If so, this would be great for VR.

I highly doubt it. Here is something on the topic that might interest you.

Interesting! It could theoretically act like a Kinect if it were advanced enough, and you wouldn’t need any additional hardware.

scientists bout to see me on the goddamned pot.

I no longer have any sense of privacy.